Meta’s Oversight Board, the Supreme Court-esque body formed as a check on Facebook and Instagram’s content moderation decisions, received nearly 1.3 million appeals of the tech giant’s decisions last year. That mammoth figure amounts to an appeal every 24 seconds, or 3,537 cases a day. The overwhelming majority of those appeals involved alleged violations of just two of Meta’s community standards: violence/incitement and hate speech.

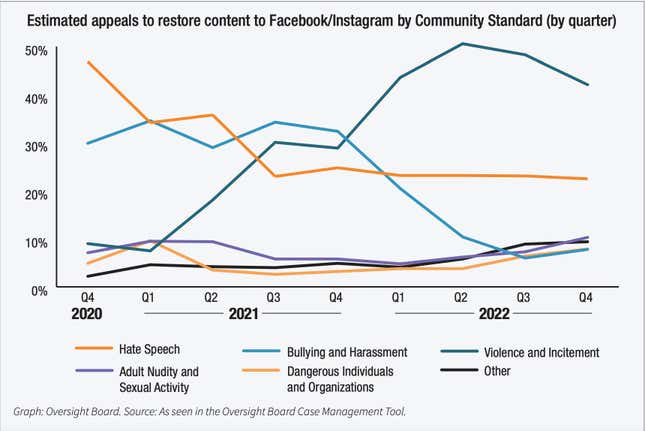

The appeal figures are part of the Oversight Board’s 2022 annual report, shared with Gizmodo Tuesday. Facebook and Instagram users appealed Meta’s decision to remove their content 1,290,942 times, which the Oversight Board said marks a 25% increase in the number of appeals over the year prior. Two-thirds of those appeals concerned alleged violence/incitement and hate speech. The chart below shows the estimated percentage of appeals the Oversight Board received, broken down by the type of violating content, since it started taking submissions in late 2020. Though hate speech appeals have dipped, appeals related to violence/incitement have skyrocketed in recent years.

The largest portion of those appeals (45%) came from the United States and Canada, which have some of the world’s largest Facebook and Instagram user bases. American and Canadian users have also been on Facebook and Instagram for a comparatively long time. The vast majority of appeals (92%) involved users asking to have their accounts or content restored, compared to a much smaller percentage (8%) who appealed to have certain content removed.

“By publicly making these recommendations, and publicly monitoring Meta’s responses and implementation, we have opened a space for transparent dialogue with the company that did not previously exist,” the Oversight Board said.

Oversight Board overturned Meta’s decision in 75% of case decisions

Despite receiving more than a million appeals last year, the Oversight Board only makes binding decisions in a small handful of high-profile cases. Of the 12 decisions it published in 2022, the Oversight Board overturned Meta’s original content moderation decision 75% of the time, per its report. In another 32 cases up for consideration, Meta determined its own original decision was incorrect. Fifty cases total for review might not sound like much compared to more than a million appeals, but the Oversight Board says it tries to make up for that disparity by purposefully selecting cases that “raise underlying issues facing large numbers of users around the world.” In other words, those few cases should, in theory, address larger moderation issues pervading Meta’s social networks.

The nine content moderation decisions the Oversight Board overturned in 2022 ran the gamut in terms of subject matter. In one case, the board rebuked a decision by Meta to remove posts from a Facebook user asking for advice on how to talk to a doctor about Adderall. Another more recent case saw the Oversight Board overturn Meta’s decision to remove a Facebook post that compared the Russian army in Ukraine to Nazis. The controversial post notably included a poem that called for the killing of fascists as well as an image of an apparent dead body. In these cases, Meta is required to honor the board’s decision and implement moderation changes within seven days of the ruling’s publication.

Aside from overturning moderation decisions, the Oversight Board also spends much of its time on the less flashy but potentially just as important role of issuing policy recommendations. These recommendations can shift the way Meta interprets and enforces content moderation actions for its billions of users. In 2022, the Oversight Board issued 91 policy recommendations. Many of those, in one way or another, called on Meta to increase transparency when it comes to informing users why their content was removed. Too often, the Oversight Board notes in the report, users are “left guessing” why they had certain content removed.

In response to those transparency recommendations, Meta has reportedly adopted new messaging telling people which specific policies they violated if the violations involve hate speech, dangerous organizations, bullying, or harassment. Meta will also tell users whether the decision to remove their content was made by a human or an automated system. The company also changed its strike system to address complaints from users that their accounts were being unfairly locked in Facebook Jail.

Meta also made changes to the ways it handles crises and conflicts due to Oversight Board pressure. The company developed a new Crisis Policy Protocol, which it used to gauge the possible risk of harm associated with reinstating former President Donald Trump’s account. While some speech advocates like the ACLU praised Meta’s decision to reinstate Trump, other rights groups like the Global Project Against Hate and Extremism and Media Matters said it was premature. Similarly, Meta also reviewed its policies around dangerous individuals and organizations to make them more risk-based. Now, groups or individuals deemed the most dangerous are prioritized for enforcement actions on the platform.