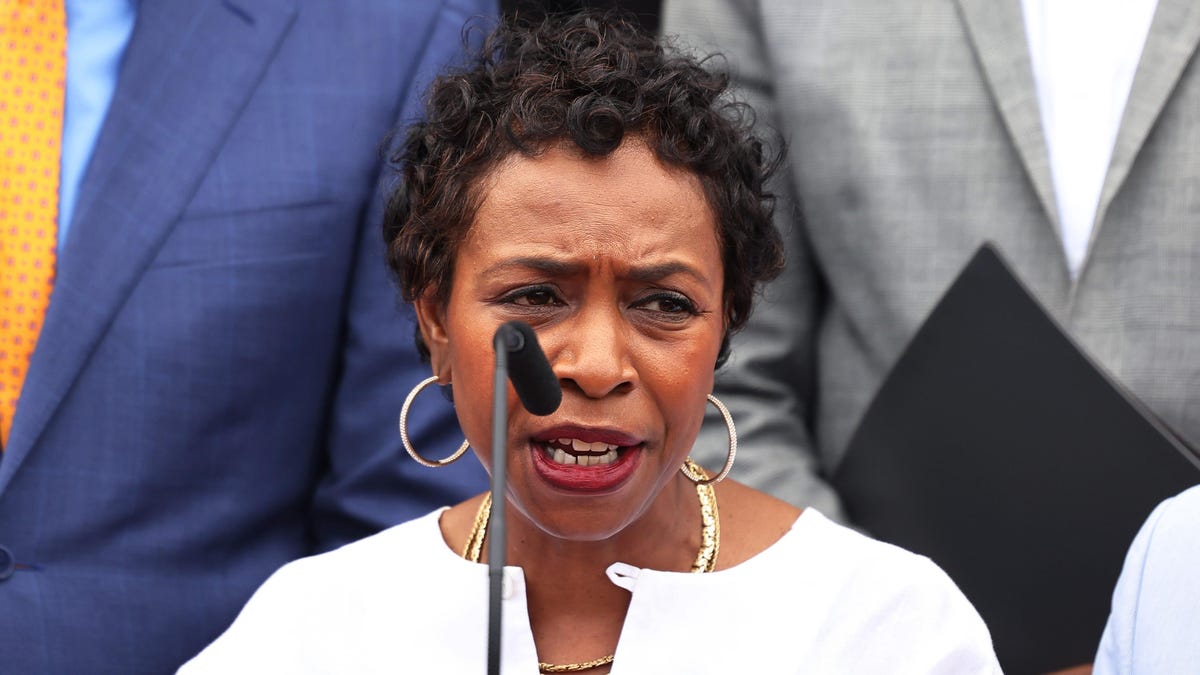

Rep. Yvette Clarke wasn’t exactly surprised when former President Donald Trump used an AI voice cloning tool to make Hitler, Elon Musk, and the Devil himself join a Twitter Space to troll Florida governor Ron DeSantis earlier this year. The former president wasn’t fooling anyone with the doctored screenshot, but Clarke worries similar political deepfakes can be weaponized to “create general mayhem” in what’s already shaping up to be a maddening 2024 election season. Without proper disclosures, Clarke, who has spent years warning of the danger of unchecked AI systems, says a skilled agent of chaos could even cause voters to stay home on election day, potentially influencing an election’s outcome.

“There are people in different laboratories, tech laboratories if you will, developing the ability to destroy, deceive, disguise, and create general mayhem. That’s some people’s 24-hour job. Congress hasn’t been able to keep up with a regulatory framework to protect the American people from this deception,” Clarke said in an interview with Gizmodo.

The New York Democrat believes her recently introduced REAL Political Ads Act could solve part of that problem. The relatively straightforward legislation would amend the Federal Election Campaign Act of 1971 to add language requiring political ads to include a disclosure if they use any AI-generated videos or images. These updates, Clarke said, are essential to ensure campaign finance laws keep pace with AI and prevent further erosion of trust in government.

“My idea is very simple. It doesn’t brush up on First Amendment rights. It’s simply to disclose, where political ads are concerned, that the advertisement was generated by artificial intelligence. Now, what happens after that remains to be seen. I’m not here to stifle innovation or creativity, or one’s First Amendment rights,” she said. She added that she is “not necessarily convinced” that Congress will have a serious discussion of the ramifications of AI, but she is hopeful.

Since Clarke introduced the legislation in early May, Trump, DeSantis, and even the Republican National Committee have all released ads and other political material fueled by generative AI. Though most of the examples so far have come from one end of the political spectrum, Clarke says the issue affects everyone because “deepfakes don’t have a party affiliation.”

Others are taking notice. At the state level, legislators in more than half a dozen states have proposed legislation targeting deepfakes used for everything from political campaigns to child sexual abuse material. At the federal level, the White House recently met with leaders from seven AI companies and secured voluntary agreements from them on external testing and watermarks. Clarke applauded the efforts as a “strong first step” in ensuring safeguards for society.

None of this is necessarily news to Clarke though. The congresswoman was one of the first lawmakers to propose legislation requiring creators of AI-generated material to include watermarks. That was back in 2019, long before models like OpenAI’s ChatGPT and Google’s Bard became household names. Four years later, she is hoping her colleagues have taken note and will seize on the opportunity to get ahead of deepfakes and AI-generated content before it’s too late.

“Imagine if we wait for another election cycle. By then there will be another iteration. It will be AI 2.0 or 2.5 and we’ll be catching up from there,” she said.

The following interview has been edited for length and clarity.

Deepfakes have been circulating in politics and the public consciousness for some time. Why did you decide this was the right time to introduce the REAL Political Ads Act?

Innovation has taken us to a whole new level with artificial intelligence and its ability to mimic, to fabricate scenarios, visually and audibly, that pose a real threat to the American people. I really knew after seeing the RNC [Republican National Committee’s] depiction of Joe Biden that it was out there, that the weaponization was here. In that case, they put a small disclosure on their video but I know that there will be others who won’t be as conscientious. There will be those who intentionally throw up fabricated content to disrupt, confuse, and create general mayhem during the political season. We kinda got a sense of that during the era of Cambridge Analytica and the 2016 elections so this is the next-gen if you will.

You’ve been at the forefront of proposed deepfake legislation for years now, often ahead of most of the other members of Congress. What do you think of the sudden explosion of interest in generative AI and new evolving deepfake capabilities?

It was inevitable. While I’m here legislating, there are people in different laboratories, tech laboratories if you will, developing the ability to destroy, deceive, disguise, and create general mayhem. That’s some people’s 24-hour job. So I’m not surprised, but Congress hasn’t been able to keep up with a regulatory framework to protect the American people from this deception. The average person isn’t thinking about AI and isn’t necessarily going to question something that pops into their social media feeds or is sent in an email by a relative.

I really feel like Congress has got to step up and at least put a floor to conduct on the internet. Otherwise, we’re really dealing with the Wild Wild West. My idea is very simple. It doesn’t brush up on First Amendment rights. It’s simply to disclose, where political ads are concerned, that the advertisement was generated by artificial intelligence. Now, what happens after that remains to be seen. I’m not here to stifle innovation or creativity, or one’s First Amendment rights.

What’s the nightmare scenario you see if deepfakes are allowed to influence politics unchecked?

We don’t want to be caught flat-footed when an adversary outside of our particular political dynamic, or a global adversary, decides to detonate a deepfake ad and injects it into our political discourse to create hysteria among the American people. Imagine receiving a video on election day that shows flooding in a particular area of the country and it says, today’s elections have been canceled, so people don’t vote. It’s not farfetched. I think there are a whole host of nefarious characters out there both domestically and internationally that are looking forward to disrupting this election cycle. This will be the first election cycle where AI generative content is out there to be consumed by the American people.

We’re slowly seeing an erosion of trust and integrity in the democratic process, and we’ve got to do everything we can to undergird and strengthen that integrity. The past few election cycles have sent the American people for a loop. And while some people are determined to hold onto this democracy with all their might, there are a lot of folks who are becoming disaffected as well.

Deepfake technology has gotten much better in recent years but there also seems to be a stronger appetite for conspiratorial thinking. Are these two factors potentially feeding off of each other?

We’ve reached a new high or a new low, depending on how you look at it, of propagandizing our civil society. The problem with deepfakes is that you can create whatever scenario you want to validate whatever theory you have. There doesn’t have to be a factual or scientific basis for it. So those who want to believe that there’s a child trafficking ring and a pizzeria in Washington D.C. can actually depict that through a deepfake scenario.

I think the abrupt adoption of virtual lives as a result of the pandemic opened us up to far more intrusion into our lives than ever before. Some people dibbled and dabbled with search engines and with social media platforms before but it wasn’t really what they did all day. Now, you have folks who never go into an office. Technology companies know more about you now.

What sort of political appetite is there among your colleagues to move forward with AI regulation generally?

They’re a lot more conscious of it. I think having the creators of AI systems come out with concerns about how their technology can be used not only for good but also for nefarious pursuits has also percolated among my colleagues. I’m getting a lot of feedback from colleagues, primarily younger colleagues and colleagues who are Democrats. That’s not to say there aren’t those on the other side of the aisle who have taken notes. I just find that the agendas for what we’re pursuing in terms of policy are divergent.

Divergent in what ways?

Well, right now my colleagues on the Republican side are doing culture wars and we’re doing policy. They [the Republican Party] dominate the agenda. We should have a law of the land around data privacy by now. That’s the nugget, the algorithm that then creates the AI. It’s all built upon the personal data that we’re constantly pumping into the technology. Our behavior is being tracked and based on that, products are being developed and our own information is used against us.

This year both the Trump and DeSantis presidential campaigns have already published deepfake political material, some more clearly works of parody than others. What do these examples tell you about the moment?

We’re here. At the end of the day my legislation didn’t come out of thin air, we knew what the capabilities of the technology were. Now we’re in the age of AI where the amount of mega data that goes into systems is at your fingertips. I think about all of the emails and social media links that I receive in a day. People will be inundated with political content and I’m sure a certain portion of it will be AI-generated.

How confident are you that Congress can make meaningful strides in limiting deepfakes before it’s too late?

Well, I’m going to do everything I can to continue to shine a light on and address this issue through legislation. My proposed legislation is a simple solution and it should be bipartisan because deepfakes don’t have a party affiliation. It can affect anyone at any time, anywhere. I’m not necessarily convinced that we’ll have an opportunity to take it up in this Congress. My hope is that in the next Congress, we’ll have a set of policies that really addresses our online health.

I’m open to any point in time that it gets done, but just imagine if we wait for another election cycle. By then there will be another iteration. It will be AI 2.0 or 2.5 and we’ll be catching up from there. And it’s not relegated to one political party or another. As a matter of fact, it’s not even relegated to just Americans. Any source from anywhere in the world can be disruptive. The North Koreans can get involved or the Iranians or the Chinese, or the Russians. We’re all connected.